The Scientific Computer System (SCS) was designed and implemented based on a user requirements study completed in 1986. The first version of SCS was certified in 1989 on the NOAA Ship MALCOLM BALDRIGE. The general requirements are summarized below:

The Scientific Computer System (SCS) is a data acquisition and display system designed for oceanographic, atmospheric, and fisheries research applications. It acquires sensor data from shipboard oceanographic, atmospheric, and fisheries sensors and provides this information to scientists in real time via text and graphic displays, while simultaneously logging the data to disk for later analysis. SCS also performs quality checks by monitoring I/O, applying delta/range checks, and plotting data after acquisition.
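
The delta/range checks mentioned above can be illustrated with a minimal sketch. It is not SCS code: the names `QualityLimits`, `check_sample` and `check_series`, and the idea of per-sensor limits stored this way, are assumptions made purely for illustration. A range check flags a value outside fixed bounds, and a delta check flags a jump from the previous sample that is larger than allowed.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class QualityLimits:
    """Hypothetical per-sensor limits (illustrative names, not SCS settings)."""
    min_value: float
    max_value: float
    max_delta: float  # largest allowed jump between consecutive samples


def check_sample(value: float, previous: Optional[float],
                 limits: QualityLimits) -> List[str]:
    """Return the quality flags raised by one sample (empty list = passed)."""
    flags: List[str] = []
    # Range check: the value must lie inside [min_value, max_value].
    if not (limits.min_value <= value <= limits.max_value):
        flags.append("range")
    # Delta check: the change since the previous sample must not exceed max_delta.
    if previous is not None and abs(value - previous) > limits.max_delta:
        flags.append("delta")
    return flags


def check_series(values: Sequence[float],
                 limits: QualityLimits) -> List[List[str]]:
    """Apply both checks to each sample in order, comparing it to its predecessor."""
    results: List[List[str]] = []
    previous: Optional[float] = None
    for value in values:
        results.append(check_sample(value, previous, limits))
        previous = value
    return results
```

With assumed sea-surface-temperature limits of -2 to 40 and a maximum delta of 1.5, the series `[12.1, 12.3, 19.0, 55.0]` passes the first two samples, raises a delta flag on the third, and raises both flags on the fourth.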

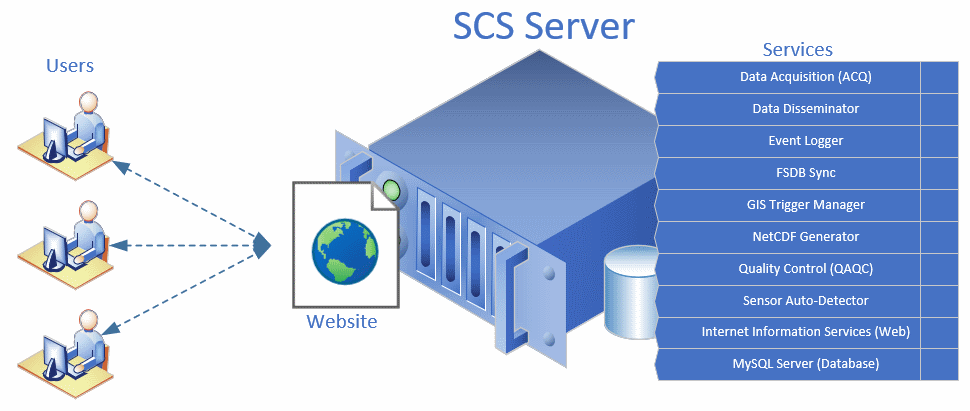

SCS provides this functionality through a set of programs that work in concert to acquire, log and display the data. The system is structured so that each program performs a single function and may be run on an independent server. Most client interactions are conducted via a modern web browser. Isolating user interface displays from mission-critical data collection functions simplifies maintenance of the system while maximizing its flexibility, long-term use, platform independence and reliability. The programs interconnect using a client/server architecture based on SignalR, Windows Communication Foundation (WCF) and various web technologies, which allows the display interfaces to run remotely on client computers while the real-time data is provided via SignalR from the acquisition computer.
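
The isolation of displays from data collection can be sketched with a small publish/subscribe example. This is an in-process stand-in written for illustration only; it does not use or imitate the SignalR API, and `FeedHub`, `acquire` and the handler names are hypothetical. The point it shows is the ordering: a reading is logged first and then fanned out, and a display that fails does not interrupt acquisition.

```python
from typing import Callable, Dict, List, Tuple

Reading = Dict[str, float]
Handler = Callable[[Reading], None]


class FeedHub:
    """Hypothetical in-process hub; a stand-in for a real-time feed, not SignalR."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = {}

    def subscribe(self, sensor: str, handler: Handler) -> None:
        """Register a display callback for one sensor's feed."""
        self._subscribers.setdefault(sensor, []).append(handler)

    def publish(self, sensor: str, reading: Reading) -> int:
        """Send a reading to every subscriber; return how many accepted it."""
        delivered = 0
        for handler in self._subscribers.get(sensor, []):
            try:
                handler(reading)
                delivered += 1
            except Exception:
                # A failing display is skipped so that acquisition keeps running.
                continue
        return delivered


def acquire(hub: FeedHub, log: List[Tuple[str, Reading]],
            sensor: str, reading: Reading) -> int:
    """Log the reading first, then publish it to any attached displays."""
    log.append((sensor, reading))
    return hub.publish(sensor, reading)
```

In this sketch the log entry is written before any display is contacted, so a reading is retained even when no display is subscribed or every display raises an error.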

If you have spare funds, the best place to put them is additional RAM. The more memory you add, the better the performance you will get. Generally speaking, the disk speed, disk space and CPU of a generic server are sufficient for the demands of SCS; however, being able to use RAM for caching will yield the most benefit.

SCS is currently written using the .NET Framework 4.8 and requires a host capable of running it (Windows). Future versions of SCS will target .NET Core instead, allowing the server to run Windows, Linux or a variety of other operating systems, but as of this writing the server must be Windows based.

Creating an NLB (Network Load Balancing) cluster to distribute the load and allow for scalability sounds ideal; however, this is not our default setup in the NOAA fleet and has not been tested. With the fleet moving towards virtualization and potentially even on-prem PaaS, we will not be investigating this as an option.

Clients connect over the web, so any client with an up-to-date, modern web browser should work with SCS. SCS was designed to target clients with resolutions of around 1600x1200, but the UI is responsive and will adjust to smaller resolutions. While in theory it will work on mobile devices, this has not yet been tested, so UI difficulties may arise if SCS is accessed in this manner.

The more resources you can allocate to the servers, the smoother SCS will run, but an expensive box is not a requirement. SCS can run inside a virtual machine hosted on a normal developer's laptop with minimal RAM and CPU. However, if you have a heavy load, with a large number of sensors or high data rates, you will run into latency and timeout issues if your machine cannot accommodate it. If you have a nominal setup with only a few sensors and a few clients, then a basic machine running Windows 10 will suffice. As with the recommended setup, adding more RAM to your machine will have the greatest impact on performance.

SCS has been designed to be as client agnostic as possible, both in terms of the client's location on the network and the client's operating system. The ideal way to do this was to make SCS a web-based application. All users who interact with SCS, including anonymous users, scientists and administrators alike, do so through a modern web browser. The core of the system remains application based and is hosted on a centralized server. However, these applications have become services and can run independently of any user's profile. This was done to alleviate the issues arising from HSPD-12 and other security measures that force the use of MFA and previously required a user to be logged in to run SCS. The current SCS can run in the background whether or not anyone is logged into the server, and will restart automatically if the server is rebooted for any reason (such as when it is being patched).

All sensor data logged by SCS is saved into the backend database. MySQL was chosen because it performs reasonably well, has most of the features needed and is freely available to all potential users of the software.

IIS is used as the web server to provide the website to remote clients and to host the SignalR hubs used to provide data feeds and inter-process/client communication. IIS comes as a standard part of Windows Server and an express version can be installed on Windows 10 if needed.

SCS primarily uses MySQL as its data store. However, it does write certain things to the filesystem. By default these are all written under D:\SCS, though that can be changed at installation time.

Of particular interest are:

| Folder | Purpose |
| --- | --- |
| D:\SCS\ConfigurationState | System folder containing information needed by CFE to maintain the working configuration and track changes |
| D:\SCS\Data | Sensor data folder which houses NCEI dumps, the RAW files and the NetCDF exports extracted from the database |
| D:\SCS\Email | Location of any emails which are queued up waiting to be sent |
| D:\SCS\Logs | Primary [non-database] location of system logs |
| D:\SCS\MySqlData | Primary location of database files |
| D:\SCS\Services | Location of all SCS background services |
| D:\SCS\Backup | Backups of the database (excludes sensor data!) primarily involving templates |
| D:\SCS\Temp | Temporary SCS files |
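
Because the root folder can be changed at installation time, scripts that read these folders are better off deriving the paths from a single root than hard-coding `D:\SCS`. The sketch below assumes only the folder names listed above; the function name `scs_folders` is hypothetical and is not part of SCS.

```python
from pathlib import PureWindowsPath
from typing import Dict

# Subfolder names taken from the folder list above.
SUBFOLDERS = (
    "ConfigurationState",
    "Data",
    "Email",
    "Logs",
    "MySqlData",
    "Services",
    "Backup",
    "Temp",
)


def scs_folders(root: str = r"D:\SCS") -> Dict[str, PureWindowsPath]:
    """Map each subfolder name to its full path under the chosen root.

    The default root matches the documented default; pass the root selected
    at installation time if it was changed.
    """
    base = PureWindowsPath(root)
    return {name: base / name for name in SUBFOLDERS}
```

`PureWindowsPath` only manipulates path strings and never touches the disk, so the helper can be used on any operating system, for example on a workstation that processes files copied from the server.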